Stable LM 2 12B is the newest addition to the Stable LM 2 series and a significant step forward in language modeling. Developed by Stability AI, the release consists of a pair of 12-billion-parameter models trained on a multilingual dataset spanning seven languages: English, Spanish, German, Italian, French, Portuguese, and Dutch.

Key highlights of Stable LM 2 12B include:

- Multilingual Proficiency: Stable LM 2 12B is designed for multilingual use and handles tasks efficiently and accurately across all seven of its training languages.

- Versatile Variants: The release includes both a base model and an instruction-tuned variant, so developers can choose the one that best fits their use case.

- Accessibility for Testing: Both models can be tested on Hugging Face for non-commercial use. Commercial use is available through a Stability AI Membership.

- Enhanced Conversational Skills: The release also includes an updated Stable LM 2 1.6B with improved conversational capabilities across the same seven languages.

Stable LM 2 12B builds on the established Stable LM 2 1.6B framework and aims for a balance of performance, efficiency, and memory requirements. As a medium-sized model, it delivers strong performance without requiring excessive computational resources, making it a practical choice for developers building AI language applications.
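
To put the memory side of this trade-off into rough numbers, here is a minimal sketch that estimates how much memory the raw weights alone occupy at a few common precisions, using the rule of thumb "parameters × bytes per parameter". It assumes nominal parameter counts of 12B and 1.6B and ignores activations, the KV cache, and framework overhead, so real usage will be higher. The helper name `weight_memory_gb` is hypothetical.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: nominal parameter counts (12B, 1.6B). Activations, KV cache,
# and framework overhead are ignored, so actual memory use will be higher.

BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the size of the raw weights in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, params in [("Stable LM 2 12B", 12e9), ("Stable LM 2 1.6B", 1.6e9)]:
        for dtype in ("bf16", "int8", "int4"):
            print(f"{name:<18} {dtype:<5} ~{weight_memory_gb(params, dtype):.1f} GB")
```

Under these assumptions, the 12B model needs roughly 24 GB for its weights in bf16, or about 6 GB at 4-bit precision. The 1.6B model needs about 3.2 GB in bf16.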

“We are excited to introduce Stable LM 2 12B as the latest milestone in our ongoing pursuit to push the boundaries of language modeling,” according to Stability AI. “With its robust multilingual capabilities and versatile variants, Stable LM 2 12B opens up new avenues for developers and businesses alike.”

Stable LM 2 12B has been benchmarked against other leading language models and shows solid performance on zero-shot and few-shot tasks. Its release expands the Stable LM 2 family into the 12B category and reaffirms our commitment to providing open and transparent models without compromising on power and accuracy.

Performance

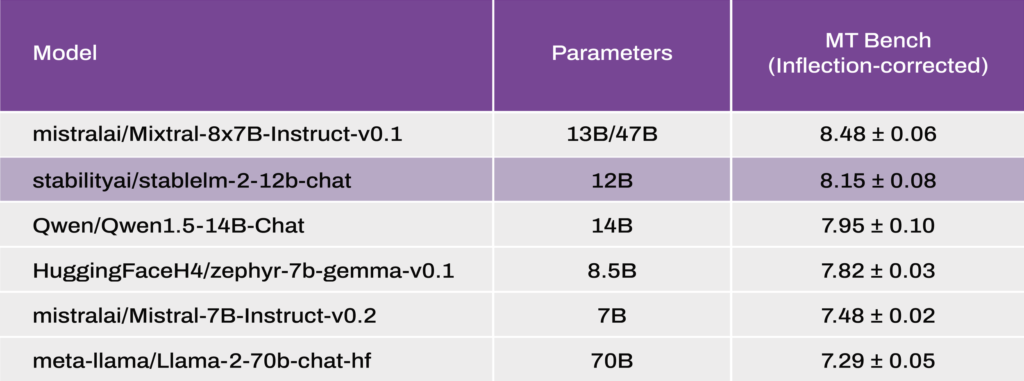

We compare Stable LM 2 12B with other strong, popular language models: Mixtral (MoE, 13B active parameters out of 47B in total), Llama 2 (13B and 70B), Qwen 1.5 (14B), Gemma (8.5B), and Mistral (7B). As shown below, Stable LM 2 12B delivers solid performance on zero-shot and few-shot tasks across the general benchmarks of the Open LLM Leaderboard and the newly corrected MT-Bench.
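
The Mixtral entry distinguishes active parameters from total parameters. Here is a minimal sketch of why that distinction matters: a mixture-of-experts model must keep all of its weights in memory but uses only some of them for each token. It assumes nominal counts (12B dense for Stable LM 2 12B; 13B active out of 47B total for Mixtral) and the common approximation of about 2 FLOPs per active parameter per token for a forward pass. The `ModelSize` class and its methods are hypothetical illustrations, not part of any library.

```python
# Sketch: total vs. active parameters for a dense model and a mixture-of-experts model.
# Assumptions: nominal parameter counts; ~2 FLOPs per active parameter per token
# (forward pass only). This is a rule-of-thumb estimate, not a measurement.

from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSize:
    name: str
    total_params: float   # parameters that must be held in memory
    active_params: float  # parameters used to process each token

    def active_fraction(self) -> float:
        """Share of the stored weights that each token actually uses."""
        return self.active_params / self.total_params

    def forward_flops_per_token(self) -> float:
        """Approximate forward-pass FLOPs per token (2 x active parameters)."""
        return 2.0 * self.active_params


MODELS = [
    ModelSize("Stable LM 2 12B (dense)", 12e9, 12e9),
    ModelSize("Mixtral (MoE)", 47e9, 13e9),
]

if __name__ == "__main__":
    for m in MODELS:
        print(f"{m.name:<26} uses {m.active_fraction():.0%} of its weights per token, "
              f"~{m.forward_flops_per_token() / 1e9:.0f} GFLOPs/token")
```

Under these assumptions, Mixtral uses about 28% of its weights for each token (roughly 26 GFLOPs per token, compared with about 24 for the dense 12B model) but still needs memory for all 47B parameters.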

MT Bench (Inflection-corrected)

Open LLM Leaderboard (instruct models)

Open LLM Leaderboard (Base models)

0-Shot NLP Tasks (Base Models)

With this new release, we extend the Stable LM 2 family of models into the 12B category, providing an open and transparent model that makes no compromise on power and accuracy. We are confident this release will enable developers and businesses to keep building the future while retaining full control over their data.