Custom silicon is key in the race for faster, smarter, and more efficient AI systems. Google’s strategy centers on a new AI chip named Opal, designed to drive the next era of generative AI. To meet the growing demands of large language models (LLMs) like Gemini, Google is focusing on infrastructure, which means changing both the software and the hardware that powers it.

Tech leaders and decision-makers need to understand how Opal fits into large-scale AI infrastructure. This isn’t just a technical overview; it’s a glimpse into the future of AI. Looking closely at Opal offers insight into AI inference, training efficiency, and enterprise-scale deployment.

Why Google Built Opal

For years, AI hardware has been all about graphics processing units (GPUs). NVIDIA has been the leader in this field. While GPUs are versatile and powerful, they weren’t designed with LLMs in mind. As generative AI systems became more complex and popular, Google noticed the limits of standard chips. So, they made a smart change.

Meet Google Opal, a new custom AI accelerator built to handle the performance and scale that Google’s Gemini models need. Unlike traditional GPUs, Opal is designed specifically for large transformer-based models, which cuts latency and boosts throughput during inference.

Where GPUs are generalists, Opal is a specialist. Its design emphasizes parallelism and efficient tensor computation. This helps Google manage power better. It allows control over performance per watt and reduces inference costs. These two metrics are crucial for enterprise AI deployments.
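To make those two metrics concrete, here is a minimal sketch of how performance per watt and cost per inference could be computed for two accelerators. Every number and name below (`Accelerator`, the throughput, power, and hourly price figures) is a made-up assumption for illustration; none of them are published Opal or GPU specifications.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    """Hypothetical accelerator profile; all figures are illustrative."""
    name: str
    tokens_per_second: float  # sustained inference throughput
    power_watts: float        # average power draw under load
    cost_per_hour_usd: float  # assumed hourly price for the device


def perf_per_watt(acc: Accelerator) -> float:
    """Tokens generated per second for each watt drawn."""
    return acc.tokens_per_second / acc.power_watts


def cost_per_million_tokens(acc: Accelerator) -> float:
    """Dollar cost to generate one million tokens at full utilization."""
    tokens_per_hour = acc.tokens_per_second * 3600
    return acc.cost_per_hour_usd / tokens_per_hour * 1_000_000


# Illustrative values only, not measurements of any real chip.
generalist = Accelerator("general-purpose GPU", 10_000, 500, 4.00)
specialist = Accelerator("inference-tuned chip", 20_000, 400, 4.00)

for acc in (generalist, specialist):
    print(f"{acc.name}: {perf_per_watt(acc):.1f} tokens/s/W, "
          f"${cost_per_million_tokens(acc):.3f} per million tokens")
```

Under these assumed figures, doubling throughput at the same hourly price halves the cost per token, which is why the two metrics tend to be tracked together.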

Gemini Models and Opal

Google’s Gemini models, like Gemini 1.5, are among the boldest efforts in generative AI so far. These multimodal models can process large amounts of text, code, images, and more. But with great capability comes great computational demand.

That’s where Opal plays a critical role. Opal is the key inference engine for Gemini models, helping them run efficiently at scale on Google’s AI infrastructure. By combining Gemini with Opal chips, Google has created a complete AI system that spans data centers, silicon, and model runtime. This setup is much faster than traditional AI serving systems.

According to Google, early deployments show that Opal can achieve up to 2X performance improvements compared to the previous generation of inference hardware. This means quicker responses, more users at once, and lower costs. These are vital benefits in business settings, where every millisecond and dollar matters.

What Makes Opal Different

Opal isn’t just another chip; it’s a statement of architectural intent. Google hasn’t shared all the technical specs, but it has revealed some key design principles. These principles show how Opal gains its advantage.

First, Opal works closely with Google’s Tensor Processing Unit (TPU) system while adding important upgrades for inference. Inference is different from training: training relies on large matrix operations and backpropagation, whereas inference needs quick responses and efficient memory access. Opal is designed for the latter. It has high-bandwidth memory channels and on-chip caching, and it offers fine-tuned quantization support. This setup balances model accuracy and performance.
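As a rough illustration of the accuracy-versus-performance trade-off behind quantization, the sketch below applies simple symmetric 8-bit quantization to a handful of weights. This is a generic textbook scheme chosen for illustration; Google has not published how Opal handles quantization, and the function names and sample weights are assumptions.

```python
def quantize_int8(weights):
    """Map floats to int8 codes using one shared scale (symmetric scheme)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the signed 8-bit range used here.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from int8 codes."""
    return [q * scale for q in quantized]


# Sample weights are arbitrary illustrative values.
weights = [0.82, -1.27, 0.05, 0.40, -0.63]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 codes:", codes)
print("max round-trip error:", max_error)
```

Each 8-bit code takes one byte instead of the four bytes of a 32-bit float, so the weights need a quarter of the memory and bandwidth. The price is a rounding error of at most half the scale per weight, which is the accuracy side of the balance.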

Second, Opal is designed to be energy-efficient at scale. Google has always focused on sustainability in its data centers, and it reports that they deliver nearly 1.4 times more performance per watt than the industry average. Opal supports this goal by providing better performance per watt than standard accelerators. This efficiency cuts power use and enables denser compute clusters. It’s a win for both hyperscalers and cloud-native AI companies.

Finally, Opal is built with multi-tenancy and workload flexibility in mind. In Google Cloud, AI services support many clients with different needs. Opal helps by balancing workloads and quickly providing resources. This is perfect for inference tasks in areas like healthcare, finance, and cybersecurity. In these fields, uptime, accuracy, and security are crucial.

Why Custom Silicon Matters for Generative AI

In today’s AI world, performance goes beyond just raw computing power. It’s also about smart optimization for specific tasks. Custom chips, like Opal, help AI companies adjust their hardware for specific tasks. This leads to better performance than what general-purpose processors can provide.

Running an LLM like Gemini on a standard GPU stack can cause serious slowdowns. This is especially true when handling long input sequences or multimodal prompts. These models need fast processing and also smart memory use. They require good context window management and optimized attention mechanisms. Opal is built to speed up all of these tasks.
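To show why long input sequences strain memory, here is a back-of-the-envelope sketch of how a transformer's key-value cache and attention score matrix grow with context length. The formulas are the standard ones for transformer inference; the model shape (`LAYERS`, `KV_HEADS`, `HEAD_DIM`) is a hypothetical example, not Gemini's actual configuration.

```python
def kv_cache_bytes(seq_len, num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Memory for the cached keys and values of one sequence.

    The factor of 2 covers the separate key and value tensors;
    bytes_per_value=2 assumes 16-bit storage.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


def attention_score_entries(seq_len):
    """Entries in one head's full attention score matrix (seq_len x seq_len)."""
    return seq_len * seq_len


# Hypothetical model shape, chosen only for illustration.
LAYERS, KV_HEADS, HEAD_DIM = 32, 8, 128

for tokens in (8_000, 128_000, 1_000_000):
    gib = kv_cache_bytes(tokens, LAYERS, KV_HEADS, HEAD_DIM) / 2**30
    print(f"{tokens:>9,} tokens: KV cache ~{gib:.1f} GiB, "
          f"{attention_score_entries(tokens):.2e} score entries per head")
```

The cache grows linearly with context length while the full score matrix grows quadratically, which is why memory bandwidth and attention optimizations matter so much for long prompts.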

Moreover, custom silicon enables rapid iteration cycles. Google can adjust its models and hardware together. Improvements in Gemini architecture lead to better chip performance. This kind of co-design is virtually impossible with third-party hardware.

From a business perspective, this leads to faster time-to-market, improved inference throughput, and reduced total cost of ownership (TCO). These three factors are key for companies planning their AI deployment strategy.

How Opal Stacks Up

Companies like NVIDIA often grab the AI chip spotlight. But Opal gives Google a quiet yet smart advantage. Unlike its rivals, Google is not selling chips; it’s selling a performance stack. Companies using Google Cloud AI services aren’t just getting computing power. They gain access to specialized infrastructure made for real-world generative AI tasks.

This is important for groups looking into private LLM use, safe data handling, and tailored model inference. Opal-backed infrastructure helps Google ensure performance SLAs. It also separates workloads more effectively. Plus, it offers various pricing models for apps that need heavy inference. According to a 2024 McKinsey report, companies deploying AI at scale could see up to a 20–30% reduction in operational costs. That makes Opal’s efficiency gains especially appealing to budget-conscious CIOs.

A recent post on the Google Developers Blog reveals that Opal is used in many Google services, including Search, Bard, and Workspace AI tools. This shows that the chip is not just a theory; it has been tested globally. It also aligns with Gartner’s forecast that AI software revenue will reach US$297.9 billion by 2027, further highlighting the importance of scalable infrastructure.

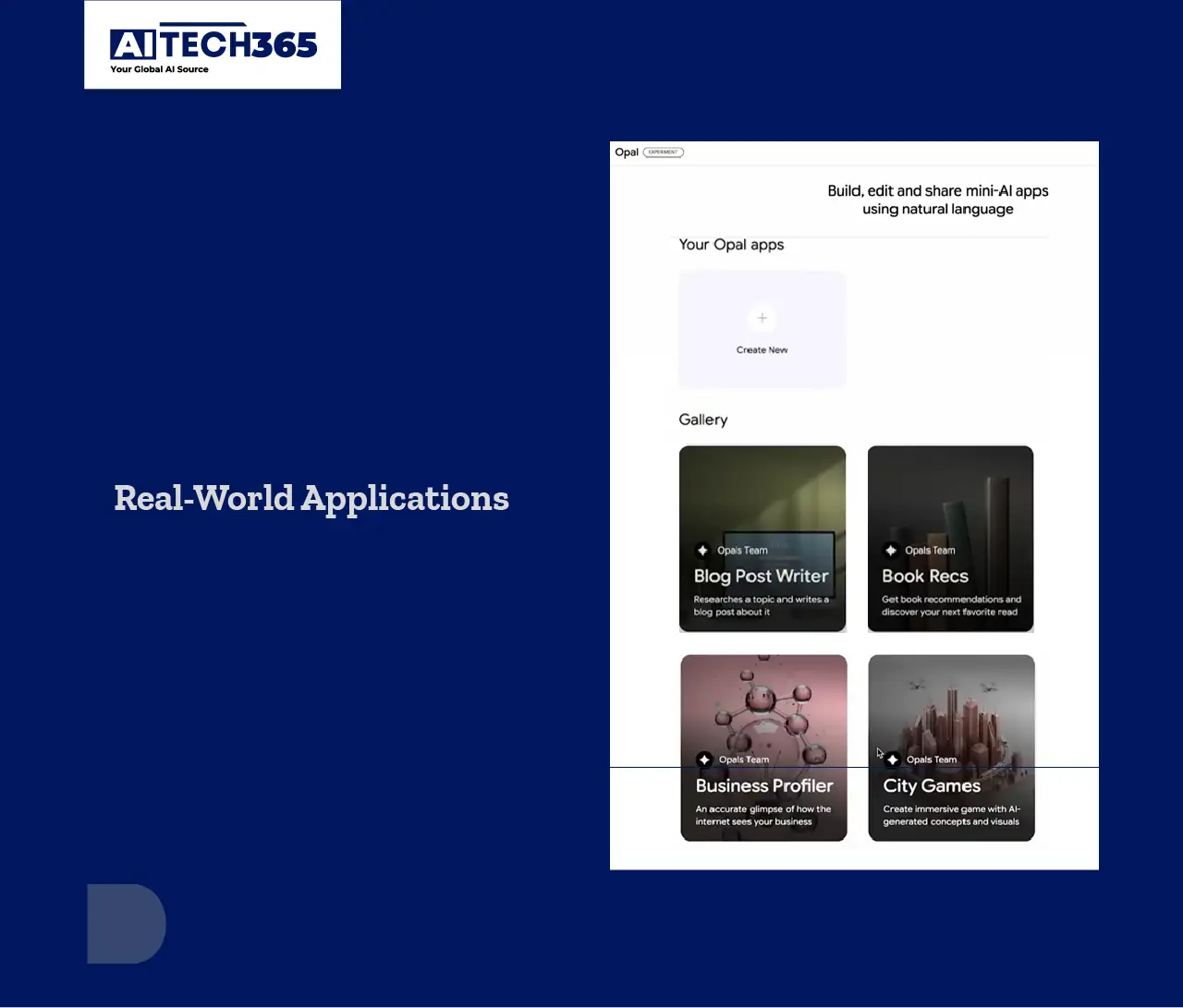

Real-World Applications

For enterprise leaders, the real question is: how does Opal impact business outcomes? The answer lies in use-case acceleration. Consider the following examples:

Generative AI models can help retail with:

- Real-time product recommendations

- Content generation

- Automating customer service

Opal-accelerated inference allows these tasks to be done in under a second. This makes interactions feel natural and quick.

In healthcare, Opal helps professionals quickly analyze unstructured clinical data and medical documents while maintaining strict data handling guarantees. This can greatly cut wait times for diagnostic insights and helps organizations stay compliant with data protection laws.

In financial services, firms use generative models for:

- Risk analysis

- Fraud detection

- Client communications

They benefit from Opal’s fast, scalable inference pipelines. This helps them make quick decisions, which is crucial in a fast-paced industry.

Strategic Implications for Decision-Makers

For CIOs, CTOs, and infrastructure leaders, Google Opal is more than just a new chip. It marks a big change in how AI systems will be created and run in the future. Generative models are getting more complex. Traditional computing solutions will have a hard time keeping up. AI infrastructure investors should look for platforms that offer compute power and precision.

By choosing platforms like Google Cloud’s AI stack, businesses can use Opal. Opal is designed for generative AI at scale. It also protects investments by ensuring they work with future Gemini versions. These versions will likely expand model size, modality, and reasoning abilities even more.

As LLMs move from testing to production, inference performance will become a key competitive differentiator. And in that race, Opal is already changing the rules.

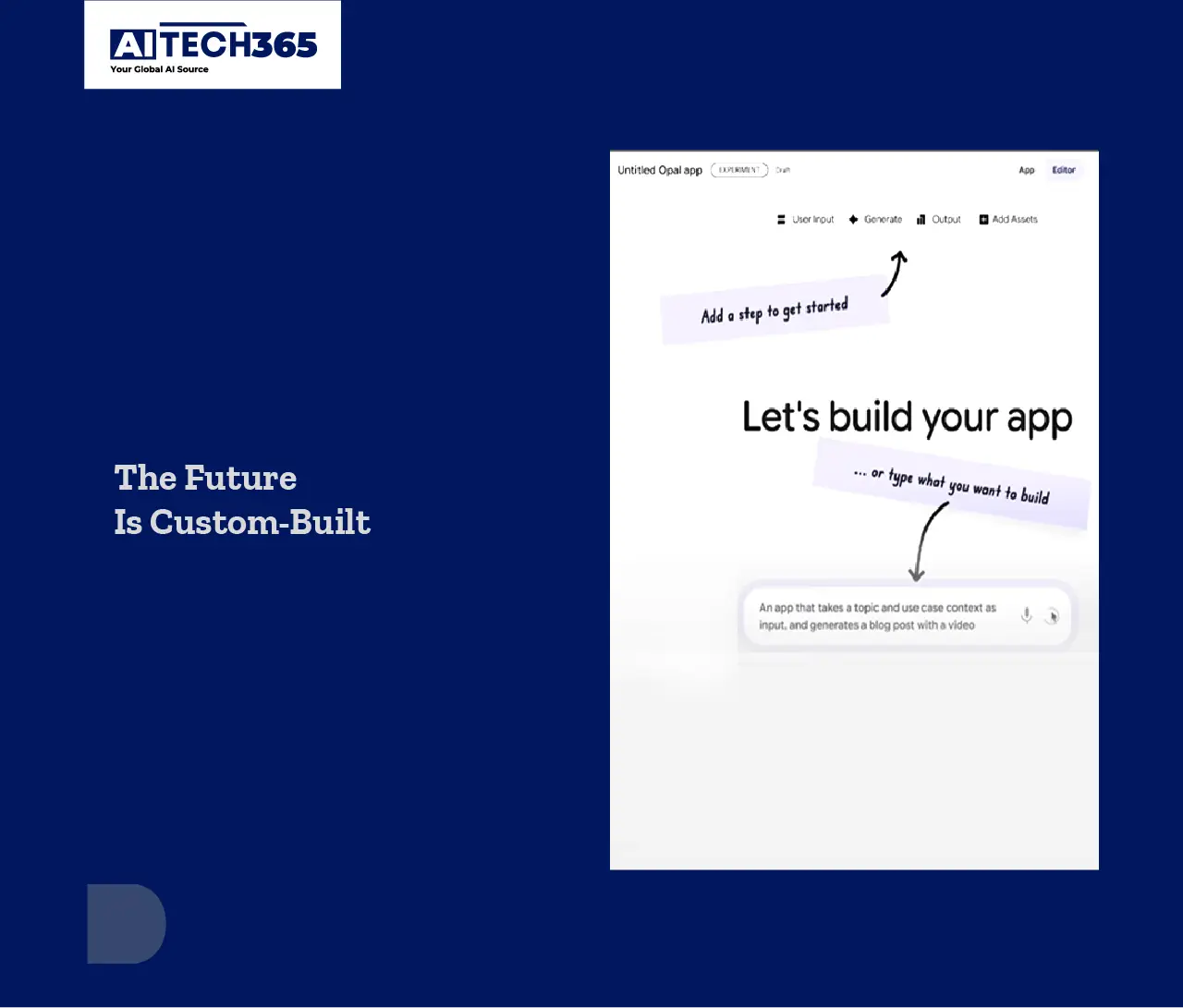

The Future Is Custom-Built

Generative AI needs a new infrastructure. This infrastructure must be fine-tuned and tightly integrated. It should also be optimized for performance, scalability, and energy efficiency. Google Opal is a leading example of this vision in action.

Google is building custom chips for its Gemini models. This addresses today’s inference challenges and prepares the way for a new type of AI-native infrastructure. For decision-makers weighing AI investments, Opal offers a useful reference point: it’s quick, focused, and made for the generative era.

Generative AI is changing industries like media and medicine. In this shift, infrastructure will set companies apart. And with Opal, Google has made it clear: it plans to lead not just in models, but in the very machines that run them.