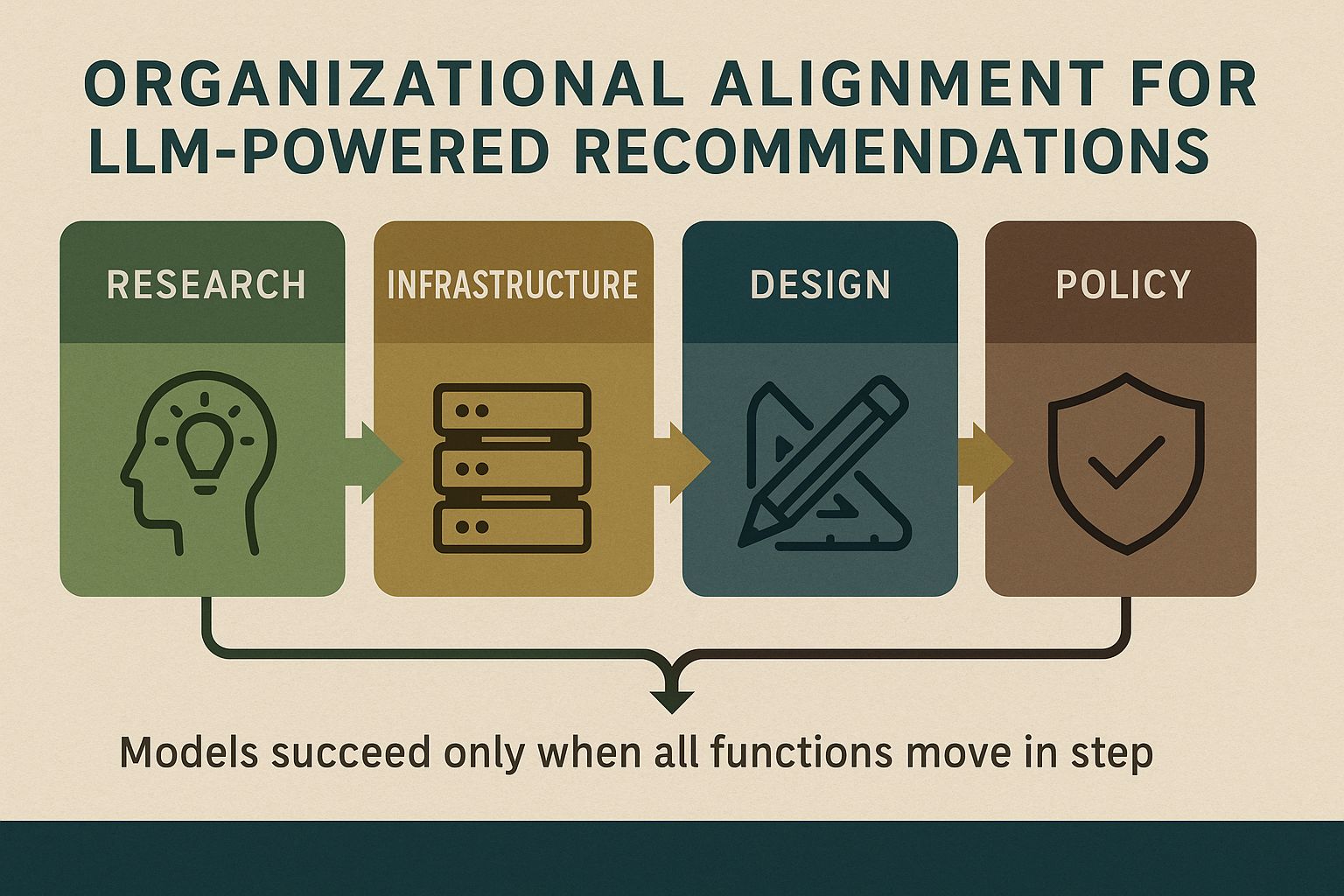

Integrating LLMs into recommendation engines sits at the intersection of product management and machine learning. In my view, success begins with organizational alignment, because models perform only when research, infrastructure, design, and policy move in step. In my experience, the most durable results come from a hybrid approach that places LLMs where semantics and context matter, while classical systems handle retrieval and scale. This column explains how to choose that mix, where LLMs fit in the stack, which signals and metrics matter beyond click-through, and which guardrails sustain trust and inclusion. Drawing on lessons from Watch Party, Instagram Trends, and Groups You Should Join, it presents a repeatable approach to shipping LLM features at scale. The objective is an engaging user experience with culturally relevant personalization that still meets cost and latency targets. Read on to see whether this approach fits your roadmap.

Product management best practices for LLM-driven products

Shipping LLM-driven features is as much an organizational challenge as a technical one. The key is cross-functional alignment: researchers, infrastructure teams, designers, and policy teams must work toward shared goals from day one. Product strategy should be iterative: begin with lightweight semantic enrichment, measure incremental lift, then scale into deeper integrations. In my role, I balance three dimensions: technical feasibility, user value, and business ROI. I define non-negotiables for each: latency ceilings, trust guardrails, and incremental engagement gains. Then I apply an iterative framework (hypothesis, lightweight test, cultural validation) before full rollout. This helps us ship LLM-powered features that resonate and scale sustainably.

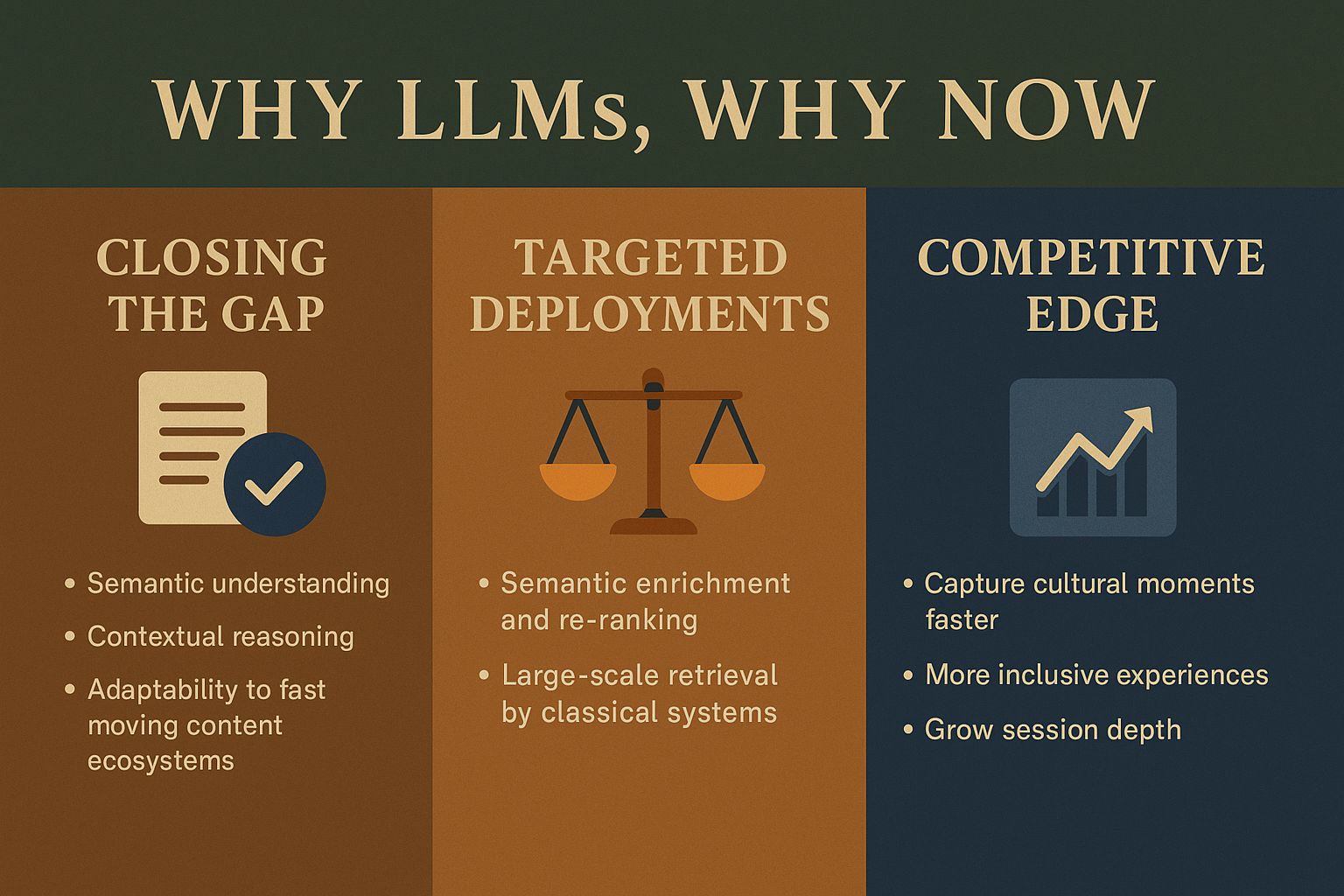

Why LLMs, why now

Classic recommenders, including collaborative filtering and gradient-boosted trees, excel at recognizing patterns in structured signals such as clicks, views, and co-engagement. They struggle to explain why users engage or interpret unstructured signals such as text, memes, or emerging cultural references. LLMs close this gap by adding semantic understanding, contextual reasoning, and adaptability to fast-moving content ecosystems. LLMs deepen sessions by connecting users to nuanced interests (for example, retro Bollywood dance reels) that co-engagement alone would miss. They increase long-term retention by making cold-start recommendations more accurate and culturally relevant. LLMs need not replace the full stack. ROI comes from targeted deployments: use LLMs for semantic enrichment and re-ranking while relying on efficient classical systems for large-scale retrieval. For executives, the case is simple: capture emerging cultural moments faster than competitors, serve more inclusive experiences across languages and cohorts, and grow session depth while meeting cost and latency targets.

Choosing the right tool: LLM, classic, or hybrid

The decision is about alignment, not a binary choice. When you have high-volume, well-labeled behavioral data and strict SLAs, classical recommenders are hard to beat on scale and cost. When the challenge involves messy, unstructured, or fast-evolving data, such as parsing free-text intent or detecting emerging trends, LLMs add unique value. In practice, the strongest systems are hybrid by design. Use classical models for retrieval and candidate generation, and apply LLMs where semantics matter most: cold start, contextual personalization, and nuanced similarity modeling between content and user interests. This balance yields cultural relevance and richer personalization while preserving speed and efficiency.
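To make the hybrid split concrete, here is a minimal sketch of a two-stage pipeline: a cheap classical score selects candidates, and a semantic score is blended in only for that short list. The function name `hybrid_recommend`, the `semantic_scorer` callback (a stand-in for whatever LLM-based scorer a team might use), and the default blending weight are all illustrative assumptions, not a description of any production system.

```python
from typing import Callable, Dict, List, Tuple


def hybrid_recommend(
    classical_scores: Dict[str, float],
    semantic_scorer: Callable[[str], float],
    k_candidates: int = 100,
    k_final: int = 10,
    semantic_weight: float = 0.3,  # assumed value; tune per product
) -> List[Tuple[str, float]]:
    """Return the top items after blending classical and semantic scores."""
    # Stage 1: classical retrieval keeps only the strongest candidates,
    # so the expensive scorer never sees the full catalog.
    candidates = sorted(
        classical_scores.items(), key=lambda kv: kv[1], reverse=True
    )[:k_candidates]

    # Stage 2: blend in the semantic score for the short list only.
    blended = [
        (item, (1 - semantic_weight) * score + semantic_weight * semantic_scorer(item))
        for item, score in candidates
    ]
    blended.sort(key=lambda kv: kv[1], reverse=True)
    return blended[:k_final]
```

The design choice worth noting is that `k_candidates` caps how many items reach the semantic scorer, which is one simple way to keep cost and latency bounded while still letting semantics influence the final order.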


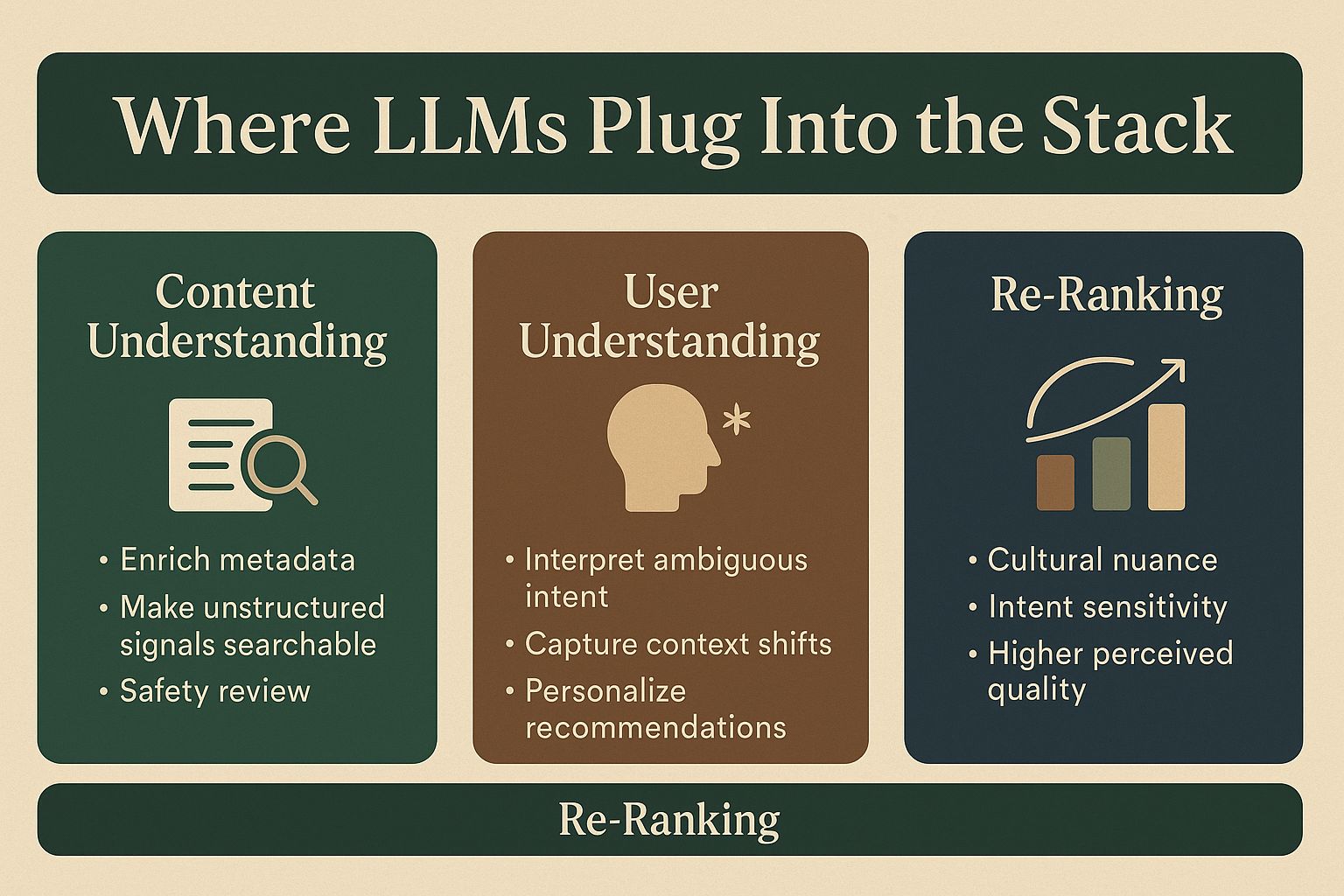

Where LLMs plug into the stack

LLMs expand both sides of the recommendation equation: content understanding and user understanding. On the content side, they enrich metadata and make unstructured signals such as captions, memes, and dialogue searchable and safety-reviewed. On the user side, they interpret ambiguous intent. For example, a user exploring “wellness” may mean fitness one week and mental health the next. The highest-impact insertion points are candidate enrichment and re-ranking. Retrieval is best served by lightweight embeddings, while re-ranking is where cultural nuance and intent sensitivity raise perceived quality. Let classical systems cast a wide net and use LLMs to sharpen the signal.
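As a rough illustration of retrieval with lightweight embeddings, the sketch below ranks items by cosine similarity to a user vector, using only the standard library. The vectors, item names, and the `retrieve` helper are hypothetical; in practice the embeddings would come from whatever model the team uses, and the returned short list would then go to a re-ranking stage.

```python
import math
from typing import Dict, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def retrieve(
    user_vec: Sequence[float],
    item_vecs: Dict[str, Sequence[float]],
    k: int = 50,
) -> List[str]:
    """Return the k item ids most similar to the user vector."""
    ranked = sorted(
        item_vecs,
        key=lambda item: cosine(user_vec, item_vecs[item]),
        reverse=True,
    )
    return ranked[:k]


# Toy example: a user whose recent "wellness" activity leans toward fitness.
items = {"yoga": [1.0, 0.0], "meditation": [0.0, 1.0], "hiit": [0.9, 0.1]}
top = retrieve([1.0, 0.0], items, k=2)
```

A linear scan like this only suits small catalogs; at scale the same idea is usually served by an approximate nearest-neighbor index.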

Cultural relevance by design

Products become cultural when they not only reflect interests but also shape them. LLMs accelerate this by identifying authentic signals buried in noisy ecosystems, such as what is resonating in Lagos today and what high schoolers in California are remixing tomorrow. They help capture microtrends before they reach the mainstream. The key is to avoid oversteering. If we push too hard, the ecosystem loses authenticity and trust. The balance comes from pairing novelty detection with governance, so surfaced trends favor authentic engagement over manufactured virality. Applied well, LLMs make platforms feel alive, diverse, and trustworthy at once.

Measuring impact and governing risk

Click-through rate is a shallow north-star metric. For long-term health, we need metrics that capture qualified engagement: session depth, user satisfaction, return frequency, and content diversity. These metrics indicate whether recommendations create durable value rather than optimizing short-term clicks. Fairness and inclusion are non-negotiable. Monitor performance across languages, regions, and underserved cohorts. If models underperform for any community, cultural credibility erodes. On the safety and privacy side, guardrails such as on-device inference, federated learning, and automated abuse detection preserve trust. Scale without trust becomes churn.
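Two of these checks are easy to sketch in code. The example below assumes content diversity is measured as Shannon entropy over the categories a user was shown, and that cohort monitoring means flagging any cohort whose metric trails the best cohort by more than a tolerance. Both definitions, along with the names `category_entropy` and `cohort_gaps`, are illustrative assumptions rather than a standard.

```python
import math
from collections import Counter
from typing import Dict, Iterable, List


def category_entropy(categories: Iterable[str]) -> float:
    """Shannon entropy (in bits) of the category mix; higher means more diverse."""
    counts = Counter(categories)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def cohort_gaps(metric_by_cohort: Dict[str, float], tolerance: float) -> List[str]:
    """Return cohorts whose metric trails the best cohort by more than tolerance."""
    if not metric_by_cohort:
        return []
    best = max(metric_by_cohort.values())
    return sorted(
        cohort
        for cohort, value in metric_by_cohort.items()
        if best - value > tolerance
    )


# Hypothetical satisfaction scores per language cohort.
flagged = cohort_gaps({"en": 0.80, "hi": 0.78, "yo": 0.60}, tolerance=0.10)
```

Comparing against the best-performing cohort is only one possible baseline; a team might instead compare against a global average or a fixed target.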

Execution and field lessons

At Prime Video, building motivation-based discovery taught me that user intent in entertainment is highly contextual. Sometimes audiences seek comfort; other times they seek novelty. That framework shaped my approach to real-time experiences like Watch Party, where synchronization and rights constraints made intent parsing and timing critical. At Instagram, launching Trends meant separating signal from noise. Authenticity is defined less by raw volume and more by how communities remix and sustain content. That lesson is directly relevant to LLMs today. Models must capture cultural context as well as data scale.