Most AI teams start with the model. I recommend starting with the decision instead. The shift may seem cosmetic, yet it isn’t. It reshapes what you build, how you evaluate progress, and whether the product earns trust and real use. I learned this across roles: as CTO and co-founder at Fluence, where we built multi-agent AI for personalized sales outreach; as CEO at Seon Health/KulaMind, building AI assist for mental-health workflows; and during the Antler residency, where founders move from idea to evidence in weeks. Every time I forced myself to define the specific decision the product must improve, we moved faster and shipped better. Let’s dive deeper into the pattern: Problem → Decision Owner → Measurable “Better” → Data You Actually Have → Smallest Loop to Prove Lift.

Don’t say “problem,” say “decision”

AI creates value where a human repeatedly faces uncertainty and must choose. Build the bridge explicitly: write the problem, then restate it as a single “Should we…?” decision that a specific owner must make at a specific moment with real stakes.

In outbound sales, instead of “write better emails with AI,” ask: Should this rep contact this prospect now, and if yes, with what angle and what proof? That reframing forced us to care about timing, persona, and evidence, not just text generation.

For product flows, instead of “process the data,” ask: Given this context, what should we do next for this user to move them forward recommend, route, or reassure? The product became next-step guidance rather than a generic summary.

If you can’t compress the problem into one “Should we…?” line, you’re not ready to pick a model. The model is an implementation detail; the decision is the product.

Decisions have owners. Design for their reality

Every decision has an owner. Incentives, risk tolerance, tools, and time all sit with that person. Center them. In B2B sales, the owner is the rep (and, secondarily, the manager).

They care about qualified replies and booked meetings, not paragraphs that merely sound good. They live in the CRM and inbox. They’ll accept suggestions if they can keep their voice and move faster. In clinical settings, the owner is the licensed clinician. They care about patient safety, standards of care, consent, and documentation. “Assist” is welcome; “override” is not. Auditability is non-negotiable. Those owners drove our design choices.

At Seon Health, we built in-workflow guidance with consent controls, audit trails, and clinician override.

At Fluence, we built agentic drafts with one-click rationales and fast editing inside the sales stack. Same engineering talent, very different products because the owners were different. If your design could be adopted without changing the behavior of a real owner, you likely picked the wrong decision or the wrong owner.

Measure what matters: one primary, two secondaries

Choose one primary outcome that matches the owner’s reality, then two secondaries to keep it honest.

At Fluence, our primary was qualified reply rate. Secondaries were booked meetings and time to first meaningful reply. We scored reply quality with a rubric built with managers, because raw reply count can reward spam.

At Seon Health, the primary was clinician-rated usefulness at point of care. Secondaries were adherence to the chosen modality or protocol, and session efficiency – how quickly we reached a concrete next step. Accuracy mattered, but usefulness and safety mattered more.

Also define harm metrics. In sales, track reputation risk and spam signals. In mental health, track over-reliance, misclassification risk, and privacy failures. You cannot claim “better” if you don’t measure what “worse” looks like.

If your metric rises while the owner’s experience deteriorates, the metric is wrong.

Also Read: From Correlation to Context: A PM’s Playbook for LLM-Driven Recommendations

Data first: sources, labels, splits, safety

Most teams write a model spec and treat data as an afterthought. That’s how you lose months. I write a data PRD first:

- Sources: What exists today (CRM events, emails, calendars, notes; de-identified transcripts; clinician annotations)? What will we never get?

- Consent & governance: What’s allowed for training vs. inference vs. analytics? What needs redaction or on-device processing? Who sees raw vs. derived data?

- Labeling plan: Which labels map to “better,” and how do we collect them with lightweight, in-flow feedback from the owner?

- Versioning & eval splits: How do we ensure apples-to-apples comparisons across time, teams, and model versions?

- Privacy & safety: Could we produce a data lineage and PII map tomorrow that legal would sign off on?

At Seon Health, ethics and regulation pushed us toward privacy by design and on-the-record clinician metadata. At Fluence, commercial constraints pushed us to cleanly link CRM, calendar, and inbox so we could attribute outcomes to actions.

If your “available data” list is aspirational, your roadmap is fiction.

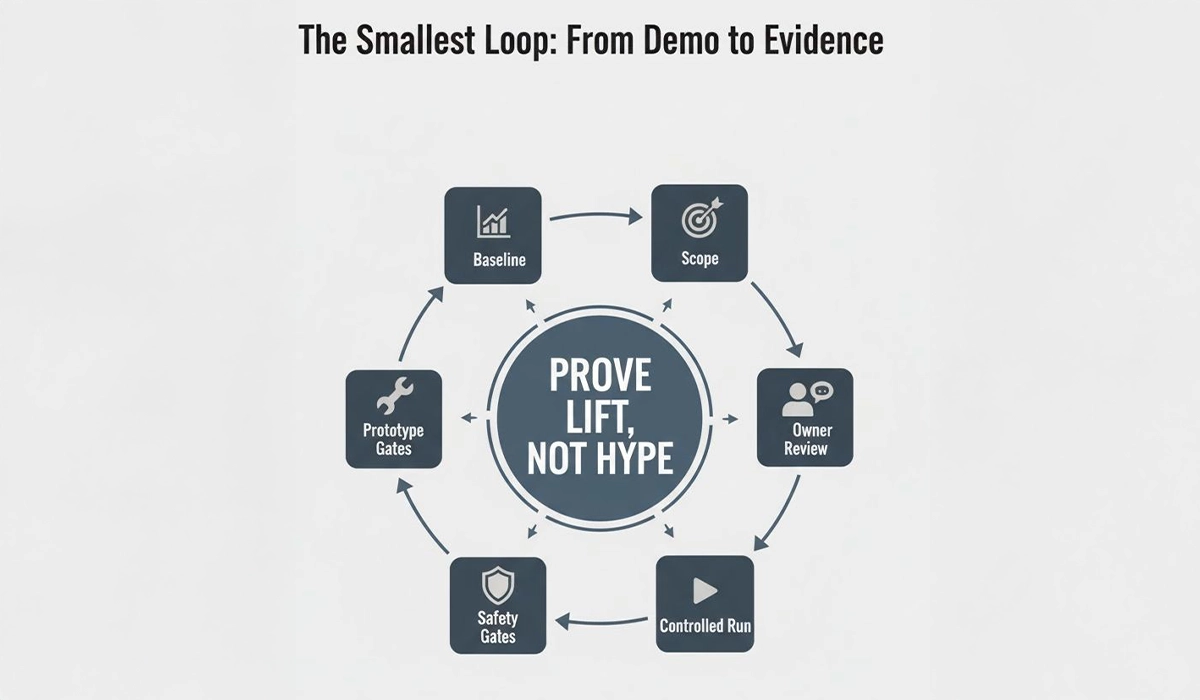

Smallest loop first: baseline, safety, controlled run

A slick demo feels like progress; evidence is progress. Run a compact, repeatable loop:

- Baseline: Measure current outcomes for a small, representative cohort. Fix definitions for “qualified” and for “harm.”

- Scope: Choose one decision moment and one persona. Define the assist (agent/model) and the human control points.

- In-workflow prototype: Meet the owner where they already work (CRM/inbox for reps; the clinical toolchain for clinicians). No side apps.

- Safety gates: Add policy tests, PII checks, prompt-injection defenses, constrained tool use, and explicit fallbacks (show nothing / require confirmation / degrade gracefully).

- Controlled run: Compare human-only vs. human+AI. Log primary/secondary outcomes plus time saved, edits per suggestion, and override reasons.

- Owner review: Sit with reps/clinicians. What helped decisions? What distracted or created risk?

- Decide: Iterate if the primary moves without raising harm; stop and document if it doesn’t.

- Publish: Share a short model card, data summary, and result snapshot. Reproducibility builds trust with leadership, customers, and investors.

One more rule from the trenches, and it’s blunt because it needs to be: If your startup hasn’t been able to launch in a month or two, it’s time to switch things up. Maybe the team doesn’t have the right skill set. Maybe leadership lacks a clear plan. Maybe you don’t know your users well enough to know what to build. Whatever the reason, address it now and make changes before you waste more time.

I learned early to sell before I build anything large. Selling the decision what will change for you tomorrow if this works? reduces noise, exposes missing skills, and forces clarity. It also creates urgency to fix the real bottleneck instead of polishing a demo.

Common pitfalls – and better defaults

- Model-first roadmaps. Start with “validate decision and owner.”

- Proxy metrics that lie. Anchor on outcomes the owner respects.

- Data wish-casting. Ship with the data you have; design the loop around it.

- Agent sprawl. Limit roles and handoffs; every tool adds a failure mode.

- No auditability. Store rationales and show them at the point of use.

Why this compounds: durable advantages beyond any model

Decision-first thinking compounds because it creates advantages that outlive any model choice. First, you gain workflow gravity you earn a seat where work already happens, so adoption grows organically instead of fighting for another tab. Second, you establish purposeful data rights you collect only what improves the specific decision, which simplifies consent, governance, and long-term stewardship. Third, you build trust capital — owners experience the product as an aid to judgment, not a replacement, and that credibility accelerates usage and expansion.

In sales teams, when contributors receive higher-quality replies with less effort and can trace the why adoption accelerates and leaders push for wider rollouts. In regulated clinical environments, keeping practitioners in control with auditability shifts the conversation from “Is AI safe?” to “Where is it useful?” And across founder cohorts and accelerator sprints, teams that frame their product around a concrete decision find truth faster: they either prove lift and raise conviction, or they sunset weak ideas before those ideas consume time and capital. Build models because you must, not because you can. Start with the decision, name the owner, define “better,” use the data you have, and prove lift in the smallest loop. That is how AI products escape the demo trap and become durable businesses.