Voice technology has reached a turning point. Until now, most voice assistants have focused on recognition and response. They can parse commands, answer questions, and execute tasks. What they have not done is understand how people feel while speaking. Emotion has largely remained outside the conversation.

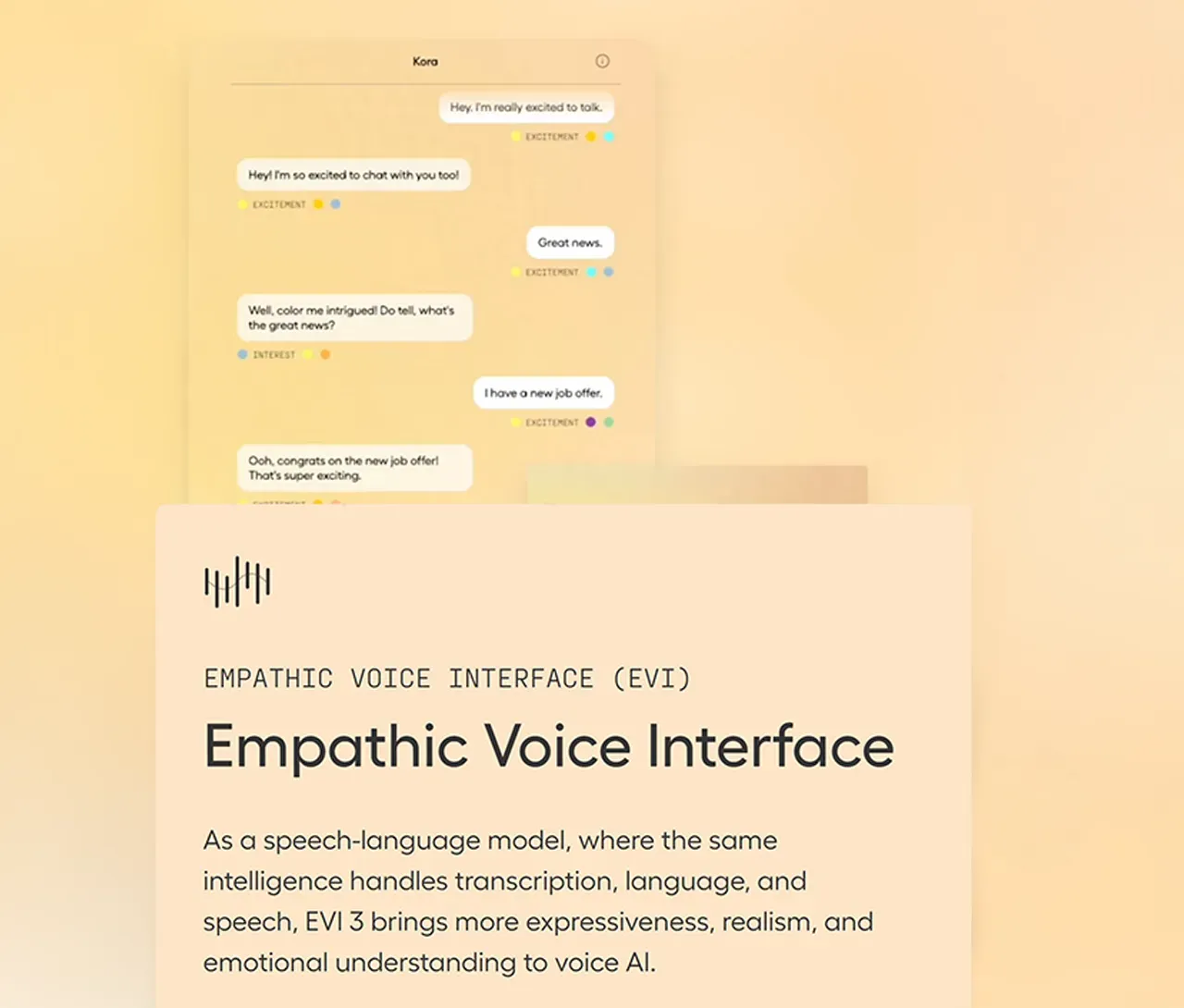

Hume AI is working to change that. Its latest voice assistant model, EVI-3, represents a new chapter in the evolution of human-computer interaction. Rather than focusing solely on what is said, EVI-3 listens for how it is said. It recognizes tone, pace, inflection, and subtle cues that reveal emotional context. This is more than a technical upgrade. It is a shift toward systems that engage not just with logic, but with empathy.

Emotionally intelligent voice assistants are not science fiction. They are being shaped right now, and their purpose goes beyond entertainment or productivity. These systems are designed to support more natural, more human conversations with technology. Hume AI’s EVI-3 is not trying to replace emotional intelligence. It is trying to recognize it, respond to it, and reflect it in real time.

Moving Beyond Transactional Voice AI

Voice assistants today are transactional by design. They are built to answer questions, complete tasks, and return results. This makes them useful, but it also limits their impact. When every interaction is a command or query, the system becomes functional but not engaging.

EVI-3 takes a different approach. It is built to recognize emotional signals and use that awareness to guide its responses. If someone speaks with frustration, it adjusts its tone. If someone sounds uncertain, it provides reassurance. These shifts do not replace content. They support it, making interactions feel more natural and less robotic.
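To make the idea concrete, here is a minimal sketch of how an application layer might turn emotion scores into adjustments to delivery style. It does not use Hume AI’s API. The emotion labels ("frustration", "uncertainty"), the 0.5 threshold, and the `ResponseStyle` and `choose_style` names are hypothetical, and the scores are assumed to come from some upstream expression model.

```python
from dataclasses import dataclass


@dataclass
class ResponseStyle:
    """Hypothetical knobs a voice assistant might expose for its next reply."""
    pace: str = "normal"          # "slower" or "normal"
    tone: str = "neutral"         # "calm", "reassuring", or "neutral"
    add_reassurance: bool = False


def choose_style(scores: dict[str, float], threshold: float = 0.5) -> ResponseStyle:
    """Map hypothetical emotion scores (0.0 to 1.0) to a delivery style.

    What the reply says is decided elsewhere; this only adjusts how it is
    delivered, mirroring the idea that emotional cues support content
    rather than replace it.
    """
    style = ResponseStyle()
    if scores.get("frustration", 0.0) >= threshold:
        # Frustrated speaker: slow down and use a calmer tone.
        style.pace = "slower"
        style.tone = "calm"
    elif scores.get("uncertainty", 0.0) >= threshold:
        # Uncertain speaker: use a reassuring tone and add a confirming line.
        style.tone = "reassuring"
        style.add_reassurance = True
    return style


if __name__ == "__main__":
    print(choose_style({"frustration": 0.72, "uncertainty": 0.10}))
    print(choose_style({"frustration": 0.05, "uncertainty": 0.64}))
```

Checking frustration before uncertainty is an arbitrary priority choice in this sketch; a real system would likely weigh several signals together.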

This kind of adjustment creates new possibilities. A healthcare assistant that senses stress in a patient’s voice can adapt its pacing or language. A customer support bot that hears confusion can slow down and clarify. A digital tutor can offer encouragement when it detects discouragement. These are not just interface improvements. They are meaningful changes in how machines participate in communication.

The Science Behind Emotionally Aware Systems

Building an emotionally intelligent voice assistant requires more than voice recognition. It requires a deep understanding of how people express emotion vocally. That includes not only words but vocal tone, cadence, rhythm, and variability. Every voice carries signals that go far beyond language.

Hume AI’s model is trained to detect those signals and interpret them within context. This is not simply about tagging a voice as happy or sad. It is about identifying a range of emotional states and adjusting system behavior accordingly. Its responses are not arbitrary guesses: the system listens, analyzes, and responds based on structured models of human expression.
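One way to picture "a range of emotional states" instead of a single happy-or-sad tag is to treat a model’s output as a set of scores and keep the few strongest ones. This sketch assumes a hypothetical score dictionary; the labels, the `top_emotions` helper, the `top_k` of 3, and the `min_score` floor of 0.2 are illustrative, not values taken from Hume AI.

```python
def top_emotions(
    scores: dict[str, float], top_k: int = 3, min_score: float = 0.2
) -> list[tuple[str, float]]:
    """Return up to top_k (label, score) pairs at or above min_score, strongest first."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [(label, score) for label, score in ranked[:top_k] if score >= min_score]


if __name__ == "__main__":
    # Hypothetical scores for one utterance: the speaker is mostly confused,
    # but also interested and a little tired.
    utterance = {
        "calmness": 0.15,
        "confusion": 0.48,
        "interest": 0.31,
        "tiredness": 0.22,
        "anger": 0.05,
    }
    print(top_emotions(utterance))
```

Keeping several labels lets downstream logic react to a speaker who is confused but still interested, a combination that a single label would flatten.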

This effort also respects nuance. Not every raised voice is anger. Not every pause is hesitation. Emotion is layered, and understanding it requires more than surface-level cues. EVI-3 is trained on varied voice inputs, capturing differences across cultures, speaking styles, and communication settings. That depth is what makes the system adaptable and useful across different environments.
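A common, generic way to avoid overreacting to a single surface cue is to smooth scores across several turns, for example with an exponential moving average. This is not a description of how EVI-3 works internally; the `EmotionSmoother` class, the `alpha` of 0.3, and the per-turn score dictionaries are assumptions for illustration.

```python
class EmotionSmoother:
    """Exponential moving average of per-turn emotion scores (hypothetical helper).

    A single spike (one raised voice, one long pause) moves the smoothed value
    only part of the way, so behavior shifts only when a signal persists.
    """

    def __init__(self, alpha: float = 0.3) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        # Labels not yet seen start from a neutral baseline of 0.0.
        self.state: dict[str, float] = {}

    def update(self, scores: dict[str, float]) -> dict[str, float]:
        """Blend one turn's scores into the running estimate and return a copy."""
        for label in set(self.state) | set(scores):
            prev = self.state.get(label, 0.0)
            new = scores.get(label, 0.0)  # a label absent this turn decays toward 0
            self.state[label] = (1 - self.alpha) * prev + self.alpha * new
        return dict(self.state)


if __name__ == "__main__":
    smoother = EmotionSmoother(alpha=0.3)
    # One loud turn followed by calm turns: the "anger" estimate stays low.
    for turn in [{"anger": 0.9}, {}, {}]:
        print(smoother.update(turn))
```

With `alpha` at 0.3, one isolated spike never pushes the estimate past 0.3, while a signal that repeats over three turns climbs well above it. A smaller `alpha` makes the system steadier but slower to notice a genuine change in mood.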

Responsiveness That Feels Human

One of the biggest shifts introduced by emotionally intelligent systems like EVI-3 is the sense of presence they provide. Traditional assistants operate in a linear flow. A user speaks. The assistant replies. The pattern continues until the task is complete. With EVI-3, the interaction becomes more fluid.

The system does not just wait for input. It listens actively. It senses when to pause, when to ask for clarification, or when to offer affirmation. These behaviors are not inserted randomly. They are grounded in real-time emotional inference.

For users, this feels different. It makes the assistant seem less mechanical and more engaged. It creates a rhythm that mirrors human conversation, where tone and pacing are as important as content.

This responsiveness also supports longer interactions. When a voice assistant reacts with emotional awareness, users are more likely to stay engaged. They are less likely to feel misunderstood or ignored. Over time, this builds a kind of trust that traditional systems do not create.


Applications Across Industries

Emotionally intelligent voice assistants are not confined to one industry or use case. Their value is in their ability to adapt to context. That flexibility makes them relevant across sectors where communication matters.

In healthcare, voice systems can adjust their tone based on emotional cues. This can enhance patient engagement, support remote care, and improve understanding of and adherence to care plans.

In education, emotionally aware tutors can respond to how a learner feels. This helps build confidence and reduce anxiety during difficult moments.

In customer service, voice systems that recognize emotional intensity can de-escalate conversations or shift tone to meet the caller where they are emotionally. This does not replace human support. It enhances it by making initial interactions more productive.

Even in entertainment, emotionally aware voice assistants can create more immersive experiences. Games, interactive stories, and training simulations all benefit from systems that respond not only to what players do but to how they express themselves.

These use cases point to a future where emotion-aware AI becomes a standard part of digital experiences: not as a gimmick, but as a layer of communication that makes technology easier to interact with.

Privacy, Boundaries, and Responsible Design

As voice assistants become more perceptive, concerns about privacy and ethical boundaries naturally follow. Emotional signals are deeply personal. They reveal information that may not be intentionally shared. This raises critical questions about how these systems collect, interpret, and act on emotional data.

EVI-3 has been developed with those concerns in mind. Emotion recognition is used to enhance communication, not to manipulate or profile. Systems like these must be transparent about what is being sensed, how it is processed, and how the information is used. Users should always remain in control of their data and their experience.

Responsible design also includes setting clear boundaries. Emotionally intelligent systems should be designed to support, not intrude. They should respond to emotional signals when they are part of the interaction, not when users are simply speaking near a device. Respecting that line is critical to maintaining trust.

For organizations deploying this technology, these considerations become part of the overall strategy. Emotional intelligence adds value only when it is implemented ethically. That means clear consent policies, robust data security, and ongoing review of how emotional data is handled in real-world use.

Designing for Empathy, Not Imitation

Emotionally intelligent systems are not trying to become human. They are not replicating emotion. They are learning to recognize it and respond in a way that supports communication. This distinction matters. It shapes how these systems are built, used, and evaluated.

Designing for empathy means focusing on the quality of the interaction, not the illusion of consciousness. EVI-3 does not simulate emotion. It models how to interpret and react to emotional context, making the assistant more helpful, more aware, and more responsive.

This approach avoids the risks that come with attempting to mimic human behavior too closely. It also keeps the focus on utility. Users are not asking for a voice assistant to feel. They are asking for it to listen and respond with greater care. That is where the value lies.

This design principle also supports long-term adoption. Emotion-aware systems that focus on empathy and responsiveness create a better user experience without stepping into areas that feel uncomfortable or artificial. They remain tools, not personalities.

The Evolution of Voice Technology

EVI-3 represents a step forward in a broader evolution. Voice interfaces are becoming more capable, more adaptive, and more human-centered. The early days of simple commands have given way to conversations. The next phase includes emotional understanding as a core feature. That shift is happening against a backdrop of rapid AI adoption: in McKinsey’s State of AI survey, 78% of respondents said their organizations used AI in at least one business function in 2024, up from 72% earlier that year and 55% the year before.

This evolution reflects a deeper truth about technology. As it becomes more embedded in daily life, the expectations around how it behaves rise. People want tools that understand them, not just respond to them. They want experiences that feel less mechanical and more intuitive.

Emotionally intelligent voice assistants meet that expectation by adding a layer of insight to every interaction. They do not just complete tasks. They understand context. They respond to tone. They adapt in real time. These abilities bring voice technology closer to the natural rhythms of human communication.

For businesses, this shift creates new opportunities. It allows them to connect with users in a more meaningful way. It supports services that are not only functional but also supportive. And it opens the door to interactions that feel personalized, even when they are fully automated.

Future Possibilities and Considerations

The path ahead for emotionally intelligent voice assistants is full of possibilities. As models become more accurate and more context-aware, the range of use cases will grow. From real-time coaching to mental health support, from immersive learning to adaptive onboarding, the impact could be broad.

But progress will depend on balance. Advancing the technology must go hand in hand with protecting users. More capability must be matched with more transparency. Greater insight must be coupled with clear boundaries.

These systems will likely evolve alongside new standards, both technical and ethical. Industry collaboration will play a role. So will regulation. The goal is not just to build smarter systems, but to build ones that are safe, trusted, and useful.

As voice AI becomes more emotionally aware, it will challenge assumptions about what machines can do and how they should behave. The result will not be perfect empathy, but more thoughtful interaction. That alone could transform how people experience digital systems.

Conclusion

Hume AI’s EVI-3 is more than a new model. It is a marker of where voice technology is headed. Emotionally intelligent voice assistants are changing the dynamic between people and machines. By listening with greater nuance and responding with greater care, they create conversations that feel less artificial and more human.

This is not about making machines feel. It is about making them understand enough to respond effectively. That understanding, when used responsibly, can improve experiences, strengthen connections, and reshape how people interact with the systems around them.

Emotion-aware AI is not the endpoint. It is part of a broader movement toward technology that meets people where they are, not just in what they say, but in how they feel. That is the breakthrough EVI-3 represents. And it is only the beginning.